
Ways to cache your Serverless applications

Hello everyone, welcome to a new devspedia story. Today I'll talk about different ways to cache your serverless applications, assuming an AWS-based architecture.

Hint: You can get a serverless project off the ground quickly with the Serverless Framework.

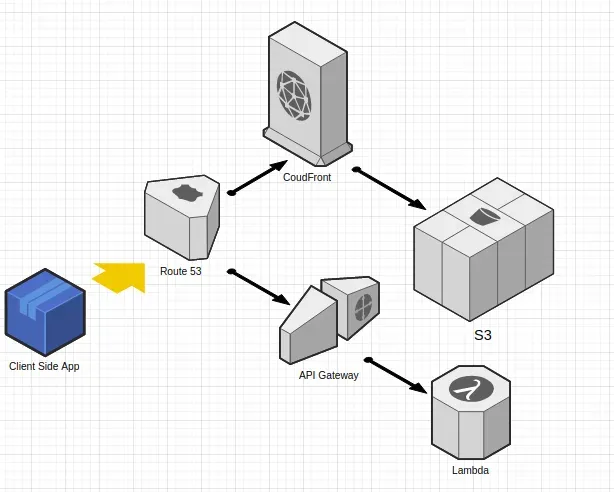

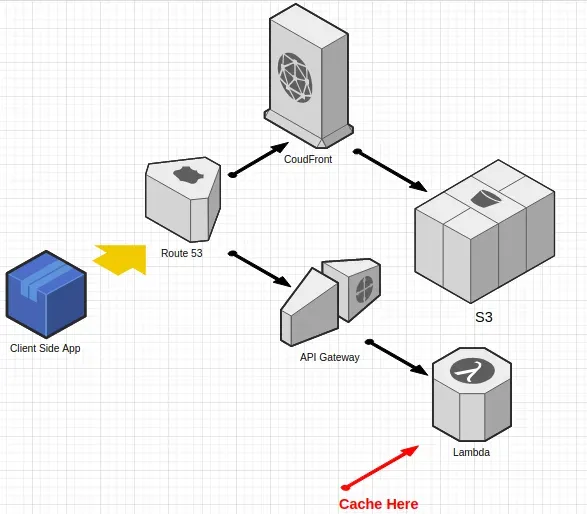

Core system components we'll be discussing

- The client-side app.

- Route 53.

- CloudFront.

- API Gateway.

- Lambda.

Here's how these components are stacked

As the flow above shows, there are four places where we can cache:

- In the client side.

- In CloudFront.

- In API Gateway.

- Inside your Lambda function.

Where possible, developers prefer to cache on the client side: a request that never leaves the device costs nothing to serve and has the lowest possible latency.

Let's discuss them one by one.

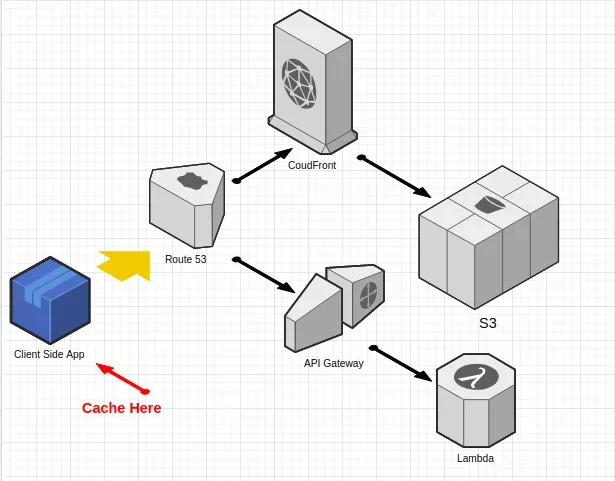

Client-side caching

Caching on the client side gives the lowest possible latency: a response served from the client's cache never reaches the next component (Route 53), so the request skips the network round trip and the execution of the rest of the stack entirely.

This is done with techniques such as Cache-Control headers, ETags, and Web Storage.

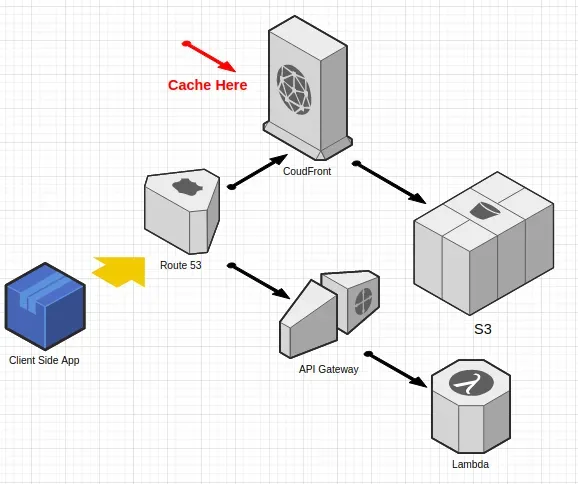

Cache on CloudFront

By caching in CloudFront, we skip fetching the file from S3 altogether. This makes responses a lot faster, because the cached version is served from edge locations distributed around the world, so the client gets it from the nearest location that already holds a copy.
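How long CloudFront keeps an object depends on the origin's Cache-Control header together with the Minimum, Default and Maximum TTL of the distribution's cache policy. The sketch below is a simplified reading of those rules from the CloudFront documentation, not CloudFront's implementation: it ignores the Expires header and the no-cache, no-store and private directives, and effectiveTtl, parseCacheControl and the TTL numbers in the example are illustrative.

```javascript
// Parse "public, max-age=600" into { public: true, "max-age": "600" }.
function parseCacheControl(header) {
  const directives = {};
  for (const part of (header || "").split(",")) {
    const [name, value] = part.trim().split("=");
    if (name) directives[name.toLowerCase()] = value === undefined ? true : value;
  }
  return directives;
}

// Simplified model: TTLs are in seconds.
function effectiveTtl(cacheControl, { minTtl, defaultTtl, maxTtl }) {
  const d = parseCacheControl(cacheControl);
  // s-maxage targets shared caches such as CloudFront and wins over max-age.
  const raw = d["s-maxage"] ?? d["max-age"];
  const seconds = Number.parseInt(raw, 10);
  if (Number.isNaN(seconds)) {
    return defaultTtl; // no usable max-age from the origin: use the policy default
  }
  // Keep the origin's value inside the policy's [minTtl, maxTtl] range.
  return Math.min(Math.max(seconds, minTtl), maxTtl);
}

const policy = { minTtl: 0, defaultTtl: 86400, maxTtl: 31536000 };
console.log(effectiveTtl("public, max-age=600, s-maxage=60", policy));
```

In practice this means the origin (for example, the Cache-Control metadata on your S3 objects) decides the TTL, while the cache policy sets the bounds it has to stay within.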

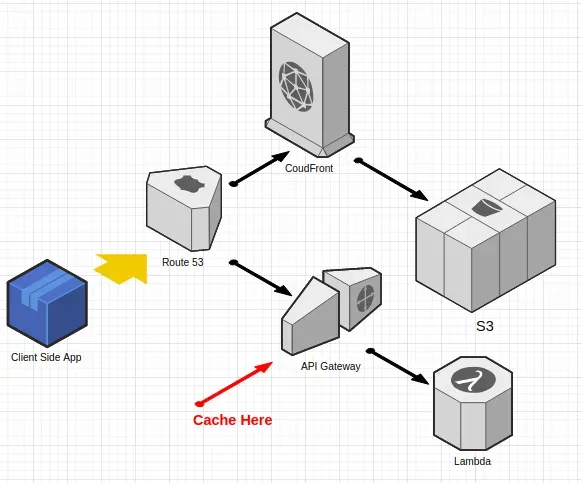

Cache on API Gateway

API Gateway (REST APIs) can also cache responses on its side: enabling caching on a stage provisions a dedicated cache for that stage. There's a limit on how long a response can stay in the API Gateway cache: the TTL defaults to 300 seconds and can be set to at most 1 hour (3600 seconds).

API Gateway caching is also flexible: the cache key can include request headers, URL query string parameters, path parameters, and more.
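Caching is a REST API feature (HTTP APIs don't offer it) and is switched on per stage. A sketch, assuming you deploy with CloudFormation: two hypothetical helpers build property fragments for an AWS::ApiGateway::Stage (cache cluster plus a MethodSettings TTL, rejecting anything above the 3600-second limit) and for the cache key of an AWS::ApiGateway::Method. These are fragments only; a real Method also needs its HttpMethod, ResourceId, RestApiId and the rest of its Integration.

```javascript
const MAX_TTL_SECONDS = 3600; // API Gateway's upper limit for a cache TTL

// Hypothetical helper: Properties fragment for AWS::ApiGateway::Stage.
function stageCacheProps(ttlSeconds = 300) {
  if (!Number.isInteger(ttlSeconds) || ttlSeconds < 0 || ttlSeconds > MAX_TTL_SECONDS) {
    throw new RangeError(`cache TTL must be an integer in 0..${MAX_TTL_SECONDS}`);
  }
  return {
    CacheClusterEnabled: true,
    CacheClusterSize: "0.5", // smallest cache size, in GB
    MethodSettings: [
      // "/*" and "*" apply the setting to every resource and method in the stage.
      { ResourcePath: "/*", HttpMethod: "*", CachingEnabled: true, CacheTtlInSeconds: ttlSeconds },
    ],
  };
}

// Hypothetical helper: makes the given query string parameters part of the
// cache key, so ?id=1 and ?id=2 are cached as separate entries.
function cacheKeyProps(queryParams) {
  const keys = queryParams.map((p) => `method.request.querystring.${p}`);
  return {
    // false = the parameter is declared but not required.
    RequestParameters: Object.fromEntries(keys.map((k) => [k, false])),
    Integration: { CacheKeyParameters: keys },
  };
}

console.log(JSON.stringify({ stage: stageCacheProps(600), method: cacheKeyProps(["id"]) }, null, 2));
```

Generating the fragments from code keeps the 1-hour limit checked before deployment instead of failing at stack update time.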

Cache in your Lambda

There are multiple ways to use caching once the request has finally reached your Lambda function.

The first common approach is to add an extra component such as Amazon ElastiCache, a fully managed service for Redis or Memcached. Your Lambda queries it first to see whether a cached version of a piece of data already exists.

This is a common practice, and many developers already use this in production.
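The ElastiCache approach is usually implemented as cache-aside (lazy loading). A minimal sketch, assuming a client shaped like node-redis v4 (get resolves to a string or null; set accepts { EX: seconds }). getOrLoad and createMemoryCache are hypothetical names, and the in-memory stand-in exists only so the logic can run without a Redis server.

```javascript
// Cache-aside: look in the cache first; only on a miss run the expensive
// loader and store its result with an expiry.
// `cache` is assumed to behave like a node-redis v4 client:
//   get(key)                   -> Promise<string | null>
//   set(key, value, { EX: s }) -> Promise, keeps the value for s seconds
async function getOrLoad(cache, key, ttlSeconds, loader) {
  const hit = await cache.get(key);
  if (hit !== null) {
    return JSON.parse(hit); // cache hit: no database round trip
  }
  const value = await loader(); // cache miss: e.g. query the database
  await cache.set(key, JSON.stringify(value), { EX: ttlSeconds });
  return value;
}

// Hypothetical in-memory stand-in with the same get/set shape, for local runs.
// It ignores EX; a real Redis node would expire the key after ttlSeconds.
function createMemoryCache() {
  const store = new Map();
  return {
    async get(key) {
      return store.has(key) ? store.get(key) : null;
    },
    async set(key, value) {
      store.set(key, value);
      return "OK";
    },
  };
}
```

In a real function, create and connect the Redis client outside the handler so warm invocations reuse the connection instead of reconnecting on every request.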

However, there's also another way to cache inside your Lambda: keep data in variables declared outside the handler, which are shared between invocations. Check the example below:

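```javascript
// Code outside the exported handler runs once per execution environment
// (on a cold start) and is shared by every invocation it serves while warm.
const cache = {};

exports.handler = async function (event, context) {
  // Warm invocation: reuse the value stored by an earlier invocation.
  if (cache.user) {
    return cache.user.cart;
  }

  // First invocation in this environment: store the value for later ones.
  cache.user = event.user;
  return cache.user.cart;
};
```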

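In the example above, the cache object is declared outside the handler, so it is created once per execution environment and survives across the invocations that environment serves. It is not shared between concurrently running environments and disappears when an environment is recycled, so it suits data that is the same for every request; if the cached value depends on the caller, key the cache by something like a user ID.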

In short, this cache lives only as long as the Lambda keeps receiving requests and stays warm. Since we mentioned the warm and cold states of a Lambda, I'd like to share this excellent article that describes them in detail:

That's all, thanks for reading devspedia, I love you, and I'll see you next time :)

This article was written by Gen-AI using OpenAI's GPT-3.

1277 words authored by Gen-AI! So please do not take it seriously, it's just for fun!
